Related to the discussion thread about what we look at when we listen:

One thing we already have working in our SpokenWeb interface is a graphic representation of the sound wave as a linear wave form. The wave form is a visual rendering of an audio signal: the pattern of a sound wave translated into a different, graphic form. Where does this manner of representing sound come from?

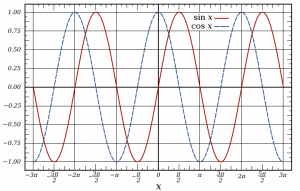

The Sine Wave.

Joseph Fourier’s discovery, published in 1822, that complex waveforms can be described as sums of sinusoidal waves is probably a key place to start. You can find the actual formula on Wikipedia, but it’s enough to say that this approach has proven very useful for analyzing patterns in time series, and so it is well suited to sound analysis and signal processing. Basically, the sine wave describes regular, repeating oscillation. When used to represent sound, it shows us amplitude (roughly what we refer to as volume or loudness), frequency (how many oscillations occur in a given time, which we hear as ‘high’ or ‘low’ pitch, high sounds having more frequent oscillations than low ones), and phase (where in its cycle the wave is at a given moment, which tells us when a cycle begins and ends).
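For readers who want the notation without leaving the page, here is the standard textbook form, with symbols of our own choosing: a single sine wave with amplitude $A$, frequency $f$ in hertz, and phase $\varphi$ in radians, followed by the Fourier series, which expresses a periodic signal $x(t)$ with period $T$ as a sum of cosines and sines at whole-number multiples of the base frequency $1/T$.

```latex
y(t) = A \sin(2\pi f t + \varphi)

x(t) = \frac{a_0}{2} + \sum_{n=1}^{\infty} \left[ a_n \cos\!\left(\frac{2\pi n t}{T}\right) + b_n \sin\!\left(\frac{2\pi n t}{T}\right) \right]
```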

Here’s what a basic sine wave looks like (this is at the heart of the digital wave forms we’re used to looking at these days):

There are other kinds of waveforms, of course. Sine waves oscillate smoothly, with rounded peaks and troughs; there are also square, triangle, and sawtooth waveforms.
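To make the difference between these shapes concrete, here is a minimal Python sketch that computes one value of each waveform for a given phase, measured in cycles (so 0.25 means a quarter of the way through a cycle). The function names and the [-1, 1] value range are our own illustrative choices; the square and triangle functions are aligned so they start their cycles the way the sine wave does.

```python
import math

# Hypothetical helper functions: each maps a phase (in cycles) to a value in [-1, 1].

def sine(phase: float) -> float:
    """Smooth oscillation with rounded peaks and troughs."""
    return math.sin(2 * math.pi * phase)

def square(phase: float) -> float:
    """Holds at +1 for the first half of each cycle and -1 for the second half."""
    return 1.0 if (phase % 1.0) < 0.5 else -1.0

def triangle(phase: float) -> float:
    """Straight-line rise and fall; starts at 0 and peaks a quarter-cycle in, like sine."""
    return 1.0 - 4.0 * abs(((phase + 0.25) % 1.0) - 0.5)

def sawtooth(phase: float) -> float:
    """Ramps linearly from -1 up toward +1, then snaps back at the start of the next cycle."""
    return 2.0 * (phase % 1.0) - 1.0

def sample(wave, frequency_hz: float, sample_rate_hz: float, n_samples: int) -> list:
    """Evaluate `wave` at evenly spaced instants, as a digital recorder would."""
    return [wave(frequency_hz * i / sample_rate_hz) for i in range(n_samples)]

if __name__ == "__main__":
    # One cycle of each shape at 8 samples per cycle (1 Hz tone, 8 Hz sample rate).
    for wave in (sine, square, triangle, sawtooth):
        print(f"{wave.__name__:>8}:", [round(v, 2) for v in sample(wave, 1.0, 8.0, 8)])
```

Printing the sampled values side by side shows the same frequency producing four quite different outlines.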

The wave form is, in principle, a graphic representation in another medium of the patterns produced by sound in air. Some very basic methods of transcribing these patterns were developed in the late 1850s, when the inventor Léon Scott developed what he called the phonautograph (patented in 1857). It consisted of a sound barrel to speak into, a diaphragm membrane that responded to the vibrations created by the voice, and a stylus, connected to the membrane, that traced those vibrations onto smoke-blackened paper or glass.

Scott hoped he’d be able to figure out how to read or decode the squiggles that he saw the voice produce on paper, but that wasn’t possible. More recently, however, researchers participating in the First Sounds project have managed to make some of Scott’s early phonautograph sheets ‘speak’ through digital sonification. Edison’s phonograph worked according to the same principle as Scott’s phonautograph, only Edison had his stylus inscribe the sound vibrations on tinfoil, and later on wax. This allowed him to reproduce the sound from the original inscriptions simply by reversing the process: the stylus ‘played back’ the inscribed vibrations (again via a membrane) through a horn (or loudspeaker).

There are other ways to represent the sound wave through direct mechanical, physical means. Stylus vibrations are one; flames manipulated by air pressure are another that have been experimented with. In 1858, John Le Conte discovered that flames were sensitive to sound. Rudolph Koenig (1862) discovered that the height of a flame changes when sound is transmitted into the gas supply of a burner. Heinrich Rubens took a long tube, drilled lots of holes into it at regular intervals, and filled it with flammable gas. He ignited the gas, which created a long row of flames of equal height, and then demonstrated that sound produced at one end of the tube creates a standing wave, mirroring in fire the wavelength of the sound being transmitted through the tube. Here’s what a Rubens Tube looks like.
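The relationship the Rubens tube makes visible is simple arithmetic: wavelength equals the speed of sound divided by frequency, and neighboring nodes of a standing wave sit half a wavelength apart. The Python sketch below assumes a speed of sound of about 343 m/s, the usual figure for air at around 20 °C. In an actual Rubens tube the sound travels through the flammable gas, whose speed of sound is different, so treat the numbers as illustrative.

```python
# Speed of sound in air at roughly 20 degrees C: an assumption for illustration only.
# Inside a real Rubens tube the relevant value is the speed of sound in the fuel gas.
SPEED_OF_SOUND_AIR_M_S = 343.0

def wavelength_m(frequency_hz: float, speed_m_s: float = SPEED_OF_SOUND_AIR_M_S) -> float:
    """Wavelength = speed of sound / frequency."""
    if frequency_hz <= 0:
        raise ValueError("frequency must be positive")
    return speed_m_s / frequency_hz

def node_spacing_m(frequency_hz: float, speed_m_s: float = SPEED_OF_SOUND_AIR_M_S) -> float:
    """Adjacent nodes (and adjacent antinodes) of a standing wave are half a wavelength apart."""
    return wavelength_m(frequency_hz, speed_m_s) / 2.0

if __name__ == "__main__":
    for f in (171.5, 343.0, 686.0):
        print(f"{f:6.1f} Hz -> wavelength {wavelength_m(f):.2f} m, "
              f"node spacing {node_spacing_m(f):.2f} m")
```

Doubling the frequency halves the spacing, which is why higher tones produce more, tighter-packed flame peaks along the same tube.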

Someone should create a digital Rubens tube, if it hasn’t been done already.
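In that spirit, here is a toy, text-only "digital Rubens tube" in Python. It is a deliberately simplified model, not a physical simulation. It assumes a tube closed at both ends and driven at one of its resonant modes, and it assumes each flame's height simply tracks the local pressure amplitude of the standing wave (real flame behavior is more complicated). All names and constants are hypothetical.

```python
import math

SPEED_OF_SOUND_M_S = 343.0  # assumed: air at about 20 degrees C (a real tube holds fuel gas)

def resonant_frequency_hz(tube_length_m, mode, speed_m_s=SPEED_OF_SOUND_M_S):
    """Mode-n resonance of a tube closed at both ends: f_n = n * c / (2 * L)."""
    return mode * speed_m_s / (2.0 * tube_length_m)

def pressure_amplitude(x_m, tube_length_m, mode):
    """Relative pressure amplitude |cos(n * pi * x / L)| of mode n at position x."""
    return abs(math.cos(mode * math.pi * x_m / tube_length_m))

def flame_heights(tube_length_m, mode, n_holes, base=2, gain=6):
    """Toy flame heights: a base flame, taller wherever the pressure swings harder."""
    if n_holes < 2:
        raise ValueError("need at least two holes")
    heights = []
    for i in range(n_holes):
        x = tube_length_m * i / (n_holes - 1)  # holes evenly spaced from end to end
        heights.append(base + round(gain * pressure_amplitude(x, tube_length_m, mode)))
    return heights

def draw(heights):
    """Draw each flame as a column of '^' characters above a line representing the tube."""
    rows = []
    for level in range(max(heights), 0, -1):
        rows.append("".join("^" if h >= level else " " for h in heights))
    rows.append("=" * len(heights))
    return "\n".join(rows)

if __name__ == "__main__":
    length, mode = 2.0, 4
    print(f"mode {mode} of a {length} m tube: {resonant_frequency_hz(length, mode):.1f} Hz")
    print(draw(flame_heights(length, mode, n_holes=41)))
```

Changing `mode` changes both the driving frequency and the number of flame peaks, which is the effect the physical tube shows.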

In moving from the acoustic to the electric era of sound recording, we move closer to the problem of the waveform that we are now facing. The microphone is really a conversion apparatus: it converts the sound wave in air (variations in air pressure) into an electrical signal (variations in voltage) that approximates the patterns of the original sound vibration. Once the original sound wave has been converted into an electrical signal, it can be displayed as a graphic pattern, for example on an oscilloscope. The oscilloscope plots the varying signal voltage over time, which is in turn a representation of the original sound signal; this is why a pure tone appears on its screen as the familiar sine wave.

Digital representations of the sound wave take this conversion one step further: the electrical signal is converted into digital information, and that in turn is converted into a digital graphic representation of a wave form. While digital audio software still tends to model its displays on the graph lines of the old sine wave, wave forms can vary greatly in appearance (different colors, etc.), and some sound analysis software can also visualize distinct aspects of sound extracted from the wave.
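Converting the electrical signal into digital information comes down to two operations: sampling (measuring the signal at regular instants) and quantization (rounding each measurement to one of a fixed set of integer levels). The Python sketch below does both for a synthetic sine tone and packs the result into an in-memory WAV file with the standard-library `wave` module. The 44,100 Hz rate and 16-bit depth are common CD-style values chosen here as assumptions, and the pure tone stands in for a real microphone signal.

```python
import io
import math
import struct
import wave

SAMPLE_RATE_HZ = 44_100   # assumed CD-style sampling rate
PEAK_16_BIT = 32_767      # largest value a signed 16-bit sample can hold

def sample_and_quantize(frequency_hz, duration_s, amplitude=0.5, sample_rate_hz=SAMPLE_RATE_HZ):
    """Sample a sine tone at regular instants and round each value to a 16-bit integer."""
    n_samples = int(duration_s * sample_rate_hz)
    return [
        round(amplitude * PEAK_16_BIT * math.sin(2 * math.pi * frequency_hz * i / sample_rate_hz))
        for i in range(n_samples)
    ]

def to_wav_bytes(samples, sample_rate_hz=SAMPLE_RATE_HZ):
    """Pack 16-bit mono samples into an in-memory WAV file."""
    buffer = io.BytesIO()
    with wave.open(buffer, "wb") as wav:
        wav.setnchannels(1)   # mono
        wav.setsampwidth(2)   # 2 bytes = 16 bits per sample
        wav.setframerate(sample_rate_hz)
        wav.writeframes(struct.pack(f"<{len(samples)}h", *samples))  # little-endian signed shorts
    return buffer.getvalue()

if __name__ == "__main__":
    samples = sample_and_quantize(440.0, 1.0)
    data = to_wav_bytes(samples)
    print(len(samples), "samples,", len(data), "bytes of WAV data")
```

Every waveform display in an audio editor starts from integer samples like these; the picture is drawn from the numbers, not from the sound itself.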

Here is a typical digital wave form as represented in the terrific open source software Audacity:
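A recording holds far more samples than a screen has pixels (one minute at 44,100 Hz is over 2.6 million samples), so a waveform display has to summarize. One common approach, sketched below in Python, is to split the samples into one chunk per pixel column and draw a vertical line from each chunk's minimum to its maximum. This is an illustrative assumption about how such views can be built, not a description of Audacity's actual code; `peak_columns` is a hypothetical helper.

```python
import math

def peak_columns(samples, width):
    """Summarize `samples` as `width` (min, max) pairs, one per pixel column.

    Each column covers a contiguous chunk of samples; drawing a vertical line
    from its min to its max yields the familiar filled-in waveform outline.
    """
    if width <= 0 or not samples:
        raise ValueError("need a positive width and at least one sample")
    n = len(samples)
    columns = []
    for col in range(width):
        start = col * n // width
        end = max((col + 1) * n // width, start + 1)  # never let a column be empty
        chunk = samples[start:end]
        columns.append((min(chunk), max(chunk)))
    return columns

if __name__ == "__main__":
    # Five cycles of a sine wave squeezed into ten columns.
    tone = [math.sin(2 * math.pi * 5 * i / 1000) for i in range(1000)]
    for lo, hi in peak_columns(tone, 10):
        print(f"{lo:+.2f} .. {hi:+.2f}")
```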

Here are some other elements of the sound wave represented in the phonetics software Praat, a tool for linguists who are interested in analyzing formants, pitch curves, and such:
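Pitch curves like the ones Praat draws rest on estimating the fundamental frequency of short stretches of sound. A classic starting point is autocorrelation: compare the signal with delayed copies of itself and find the delay (lag) at which it lines up best, which corresponds to one period. The Python sketch below shows only that basic idea. Praat's own pitch analysis is considerably more refined, and the 75 to 500 Hz search range is an assumption chosen here for illustration.

```python
import math

def estimate_pitch_hz(samples, sample_rate_hz, min_hz=75.0, max_hz=500.0):
    """Estimate the fundamental frequency of `samples` by raw autocorrelation.

    Tries every lag (delay, in samples) corresponding to a frequency between
    min_hz and max_hz, and returns the frequency whose lag gives the largest
    sum of products between the signal and its delayed copy.
    """
    min_lag = max(1, int(sample_rate_hz / max_hz))
    max_lag = min(int(sample_rate_hz / min_hz), len(samples) - 1)
    if max_lag < min_lag:
        raise ValueError("signal too short for the requested pitch range")
    best_lag, best_score = min_lag, float("-inf")
    for lag in range(min_lag, max_lag + 1):
        score = sum(samples[i] * samples[i + lag] for i in range(len(samples) - lag))
        if score > best_score:
            best_lag, best_score = lag, score
    return sample_rate_hz / best_lag

if __name__ == "__main__":
    rate = 8000
    tone = [math.sin(2 * math.pi * 220.0 * i / rate) for i in range(400)]
    print(f"estimated pitch: {estimate_pitch_hz(tone, rate):.1f} Hz")
```

Because lags are whole numbers of samples, the estimate lands near, not exactly on, the true frequency; practical pitch trackers interpolate between lags and add checks for silence and octave errors.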

There are, theoretically, no limits to how we can choose to represent the wave form digitally. Some ways are more useful than others. Some are more beautiful than others.

Here is an artist’s rendering of the sound wave moving through air. The work, entitled “The Balance,” is a visual representation of two opposite audio waves by Romantas Lukavicius (2009). I have no idea how the CGI works, but it is pretty beautiful, visualizing sound as a kind of organic textile.

Look at/listen to “The Balance.”